Students using AI are transforming education faster than most institutions anticipated. Artificial intelligence has become a daily companion in studying, writing, and research, raising complex questions about learning, fairness, and academic integrity. Instead of resisting this shift, educators and institutions must understand how to shape it—teaching students to use AI responsibly, ethically, and creatively while preserving the core values of education. This article explores evidence-based strategies, policy insights, and classroom practices that prepare students for an academic future where AI is both inevitable and indispensable.

Understanding why student use of AI is inevitable

Student use of AI has become an educational reality rather than a speculative trend. Across universities and schools worldwide, learners are turning to generative tools for writing, coding, data analysis, and study assistance. According to a 2024 survey by Pew Research Center, more than 58% of secondary and university students in the United States reported using AI at least once a week for academic purposes. The number rises dramatically in higher education, where AI is already embedded in research and digital literacy programs. The key question for teachers and institutions is no longer whether students will use AI, but how responsibly and effectively they will do so under proper guidance.

The educational landscape has always evolved alongside technology. From the printing press to the internet, tools that initially raised concern eventually became foundational to learning. Artificial intelligence follows this same pattern. Early reactions of fear and prohibition echo earlier debates about calculators in mathematics or computers in classrooms. In every instance, resistance delayed progress but never prevented it. When used intentionally, AI can enhance critical thinking, creativity, and self-regulation rather than replace them.

- 🧠 Integration is Accelerating: The EDUCAUSE 2024 Student Technology Report found that 72% of college students already integrate AI tools into some aspect of their coursework, primarily for drafting, feedback, and research assistance.

- 📚 Positive Impact with Guidance: Research in the Journal of Teacher Education (2023) showed that students who received structured instruction on responsible AI use improved their academic integrity scores and learning outcomes by 25%.

- 🎓 Institutional Adaptation: A 2024 report by UNESCO emphasized that integrating AI literacy into curricula enhances both teacher preparedness and student employability in AI-driven economies.

- ⚙️ Skill Development: Studies in the Journal of Computer Assisted Learning (2023) highlight that guided AI use strengthens critical evaluation skills and metacognitive awareness among students.

Recognizing this inevitability helps educators reframe the issue. AI is not a threat to learning but a catalyst for pedagogical reform. Instead of fearing replacement, institutions can focus on fostering judgment, synthesis, and ethical use, skills that remain uniquely human. Prohibition policies, on the other hand, create a false sense of control. They push AI usage underground, widen inequities between digitally literate and non-literate students, and foster mistrust between faculty and learners. As Times Higher Education reported in 2024, bans have consistently failed to reduce AI use in classrooms and instead harm transparency.

To build constructive engagement, teachers should address three immediate priorities:

- Transparency: Encourage students to disclose when and how they used AI tools in assignments.

- Critical Evaluation: Teach students to verify AI-generated content for accuracy, bias, and ethical implications.

- Purposeful Application: Design activities where AI serves as a support tool for exploration, not as a shortcut to avoid cognitive effort.

By taking this approach, educators transform AI from an external disruptor into an internal component of modern scholarship. The focus shifts from detection and punishment to education and accountability. This mirrors the historical trajectory of every transformative technology in learning—initial skepticism followed by thoughtful integration.

AI as the Next Literacy Frontier

Just as digital literacy became essential in the early 2000s, AI literacy now defines academic and professional readiness. Students who can interpret, prompt, and critique AI outputs hold a clear advantage in research, communication, and problem-solving. Universities that embed AI understanding into their teaching models are equipping graduates not only to use current tools but to adapt to emerging ones. As Holmes et al. (2023, Educational Review) argue, AI literacy is rapidly becoming a fundamental component of higher education curricula across disciplines.

For institutions, this means evolving from a reactive stance to a proactive framework. Courses should integrate AI ethics discussions, guided experimentation, and reflective assignments that help students articulate their role in an AI-mediated world. When students learn to question algorithms rather than copy them, education fulfills its purpose—preparing critical thinkers for the complexities of modern knowledge.

In essence, the inevitability of students using AI is not a problem to manage but a milestone in the evolution of learning itself. Educators who understand this shift and lead it with evidence-based guidance will shape not only how students learn today but also how societies think tomorrow.

Framing responsible AI use as part of digital literacy

Responsible student use of AI requires more than a list of rules. It demands a shift in how educators define digital literacy itself. For years, digital literacy focused on searching, evaluating, and communicating information online. Now it must expand to include the ability to understand, critique, and ethically apply artificial intelligence. This transformation is not optional. As AI tools increasingly shape writing, research, and problem solving, students who lack these skills risk becoming dependent users instead of informed thinkers.

Responsible AI use begins with transparency and intent. Students should know why they are using an AI tool and be prepared to explain how it contributed to their work. Treating AI as a partner in reasoning, not a replacement for it, reinforces accountability. Teachers can nurture this awareness through guided exploration rather than enforcement. For example, an assignment might ask students to compare their own draft to one refined by AI and reflect on which revisions improved clarity or logic. Exercises like this transform AI into a reflective learning companion instead of a shortcut.

- 🧠 AI as Cognitive Support: The Education and Information Technologies Journal (2023) found that students who were explicitly taught how to use AI tools critically displayed stronger reasoning and improved argument quality.

- 📚 Ethical Frameworks Matter: A report by UNESCO (2024) highlighted that teaching responsible AI use as part of digital literacy reduces academic misconduct and enhances ethical awareness.

- 🎓 Teacher Readiness: According to EdTech Magazine, teachers who receive AI literacy training feel more confident designing fair and transparent assignments involving AI tools.

Integrating AI into digital literacy programs can follow a three-tiered approach:

- Foundational Awareness: Understand what AI can and cannot do. Discuss bias, accuracy, and the limits of machine reasoning.

- Ethical Use: Address privacy, originality, and responsible data sharing. Encourage students to credit AI contributions just as they cite human sources.

- Critical Reflection: Teach students to evaluate AI-generated content, identifying where human judgment adds irreplaceable value.

When AI becomes part of digital literacy, students stop treating it as a secret advantage and start seeing it as a tool for growth. This transparency benefits both sides: students gain autonomy, and educators gain insight into how technology shapes thinking. As Merrill et al. (2023, Open Learning) suggest, explicit instruction in AI literacy promotes ethical reasoning and long-term learning retention. Education systems that normalize responsible AI use build future professionals who can work critically with technology instead of being driven by it.

Embedding AI awareness into digital literacy courses or writing programs ensures that tomorrow’s graduates are not merely consumers of AI tools but their evaluators. Responsible use is learned through modeling, discussion, and reflection—not restriction. This educational shift gives students the intellectual tools to navigate an AI world with discernment, creativity, and confidence.

Why banning student use of AI will backfire

Many institutions have attempted to safeguard academic integrity by prohibiting students from using AI. Yet evidence shows that these bans often backfire. Instead of preventing misuse, they drive AI use underground and create fear-based learning environments. A 2024 Times Higher Education analysis found that universities enforcing strict bans saw higher levels of hidden AI use and decreased transparency among students. When trust collapses, learning suffers.

Outright bans also ignore the fact that AI is already integrated into many standard tools. Grammar checkers, citation assistants, and coding platforms use machine learning in invisible ways. Prohibiting AI would mean rejecting the very software that makes writing and research more accessible. More importantly, bans punish students who are honest about experimentation while rewarding those who hide it. The result is inequity, not fairness.

AI detection tools make this problem worse. While they promise to identify AI-written text, multiple evaluations, including a 2024 report in Nature, have found that detection accuracy is inconsistent and biased. False positives can unfairly accuse honest students, especially those writing in a second language. Such tools risk damaging reputations and relationships, introducing fear where there should be dialogue.

The limits of prohibition

AI is not a temporary trend that can be legislated out of existence. Banning it from classrooms only delays essential adaptation. Instead of focusing on control, educators should focus on clarity: defining acceptable and unacceptable uses through clear policies and guided practice. This helps students distinguish between ethical assistance and academic dishonesty. Teachers can lead by example, demonstrating when AI helps to brainstorm ideas, summarize readings, or refine structure—always under human supervision.

Building a transparent culture around AI benefits institutions in three main ways:

- Improved trust: Students feel safe asking about ethical boundaries.

- Better learning outcomes: Guided AI use reinforces analytical and reflective skills.

- Reduced misconduct: When expectations are clear, students are less likely to hide their use of AI tools.

As EdTech Magazine concludes, educational systems that adapt to AI see stronger academic honesty because guidance replaces fear. The challenge is not to ban AI, but to build policies that teach discernment, creativity, and responsibility within its use. When institutions move from punishment to pedagogy, AI becomes an ally in cultivating deeper learning rather than a tool of avoidance.

Redesigning homework and assessments because of AI

As student use of AI becomes commonplace, assessment models must evolve. Traditional take-home essays or written assignments are increasingly vulnerable to unverified AI assistance. However, this does not mean homework should disappear. It means that grading structures need to reward human reasoning, collaboration, and authentic engagement. The key is balance: assignments that acknowledge AI’s presence while prioritizing original thought and participation.

Experts in higher education assessment, including the UK Quality Assurance Agency (QAA), recommend reducing the weight of homework to about 10–20% of the total grade and diversifying evaluation methods. This ensures that out-of-class work remains meaningful while limiting unfair advantages. The focus shifts from producing a polished final product to demonstrating learning progress, argument depth, and reflective awareness of AI use.
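To make the arithmetic behind a reduced homework weight concrete, here is a minimal Python sketch. Only the 10 to 20% homework range comes from the recommendation above; the component names (`presentation`, `debate`, `oral_exam`), their weights, and the sample scores are hypothetical and chosen purely for illustration.

```python
# Hypothetical weighting scheme: homework sits inside the 10-20% range,
# and the remaining weight goes to supervised, in-person components.
WEIGHTS = {
    "homework": 0.15,
    "presentation": 0.25,
    "debate": 0.20,
    "oral_exam": 0.40,
}


def final_grade(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Return the weighted average of component scores (each on a 0-100 scale)."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


# Illustrative student: a polished take-home result, weaker live performance.
scores = {"homework": 95, "presentation": 70, "debate": 80, "oral_exam": 60}
print(round(final_grade(scores), 2))  # 71.75
```

Under these assumed weights, the near-perfect homework score contributes only 14.25 points to the final grade, so the overall result mostly reflects what the student demonstrated in person.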

Shifting toward human-centered evaluation

Effective redesign does not eliminate written work but reframes its purpose. Homework can serve as a practice ground for critical thinking or a step in the preparation for in-class tasks. Meanwhile, more substantial evaluation can take place during live presentations, peer debates, and oral examinations. These methods make learning visible and interactive, reducing reliance on AI while emphasizing reasoning and communication skills.

- Presentations and discussions: Students explain their process, including how AI tools supported research or drafting.

- Group projects: Shared accountability fosters collaboration and transparency in tool usage.

- Classroom debates: Encourage spontaneous reasoning and active listening—skills AI cannot replicate.

Hybrid models are emerging in universities worldwide. For instance, Times Higher Education reported in 2025 that many institutions now blend written tasks with real-time evaluation to ensure fairness and authenticity. The combination of reflective homework and in-person validation allows educators to verify understanding rather than detect misconduct.

Designing AI-aware assignments

Instructors can also explicitly integrate AI into assignment design. Asking students to document when and how they used AI, or to critique AI-generated responses, builds transparency and digital literacy. This turns a potential threat into an opportunity for metacognition. As Lehmann and Graham (2023, Learning and Instruction) found, reflective tasks where students explain their reasoning process lead to stronger conceptual mastery than tasks focused only on outputs.

Assessment redesign is not about removing homework but redistributing emphasis. By incorporating more interactive, supervised, and process-driven components, education systems protect integrity without resorting to mistrust. As AI reshapes what students can produce, educators must reward how they think, argue, and communicate—skills that no algorithm can replace.

Teaching students how to use AI tools effectively

Students using AI need more than access—they need direction. Effective teaching in this area starts with showing that AI tools can enhance learning when used purposefully. Like any sophisticated instrument, AI delivers value only through informed and critical use. Educators must guide students to treat AI as a thinking partner, not an answer machine. The goal is to develop judgment, reflection, and precision when interacting with these systems.

Teaching AI use begins with transparency. Teachers can normalize discussion about when and how AI might support a task. For instance, an assignment could ask students to identify which parts of their project benefited from AI feedback and what they decided to change or keep. This reflection builds metacognitive awareness. Research published in Learning and Instruction (2023) found that students who explained their reasoning after using AI showed higher comprehension and better retention.

Effective classroom strategies for AI learning

There are practical ways teachers can integrate AI into the classroom while keeping control and focus on learning outcomes. A few methods include:

- Demonstration sessions: Teachers can model how to prompt AI effectively, verify results, and refine questions to improve accuracy.

- Reflection journals: Students record what they asked the AI, what the response was, and what they learned from comparing it with class materials.

- AI critique exercises: Assignments that ask students to fact-check AI answers or analyze bias in AI-generated text build critical literacy.

These approaches move students toward independence. They learn not to copy AI output but to integrate insights responsibly. As Lee and Kim (2024, Computers and Education) show, guided AI use can increase creative confidence and analytical depth in academic writing.
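For instructors who collect reflection journals digitally, the entry described in the list above (what was asked, what the AI answered, what was learned) could be captured as a small record. The Python sketch below is one possible shape, not a standard: the class name `ReflectionEntry`, its field names, and the `is_complete` check are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ReflectionEntry:
    """One hypothetical journal entry about a single AI interaction."""

    prompt: str            # what the student asked the AI
    response_summary: str  # what the AI answered, in the student's words
    lesson_learned: str    # what comparing it with class materials revealed
    verified_against: list[str] = field(default_factory=list)  # sources checked
    entry_date: date = field(default_factory=date.today)

    def is_complete(self) -> bool:
        """True when all three reflective fields contain non-blank text."""
        required = (self.prompt, self.response_summary, self.lesson_learned)
        return all(text.strip() for text in required)


entry = ReflectionEntry(
    prompt="Summarize the causes of the 1929 stock market crash.",
    response_summary="Listed speculation, margin buying, and weak regulation.",
    lesson_learned="The summary omitted the agricultural downturn covered in lecture.",
    verified_against=["Week 4 lecture notes"],
)
print(entry.is_complete())  # True
```

A completeness check like this only confirms that the student wrote something in each field; judging the quality of the reflection remains the teacher's task.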

Making AI teaching scalable

To teach AI use at scale, institutions should embed AI literacy modules into existing courses rather than creating separate electives. This ensures that every student, regardless of major, develops critical AI competencies. Simple guidelines—such as requiring AI disclosure in assignments—help maintain consistency. Support for teachers is equally crucial: professional development programs must prepare educators to use and explain AI themselves before expecting students to do so.

When students understand both the potential and the limits of AI, education gains depth. They stop viewing AI as a shortcut and start using it as a mirror to their own reasoning. This transformation happens only when teachers intentionally model responsible use and integrate reflection into the curriculum.

Avoiding overreliance on AI detection tools

Widespread student use of AI has pushed many institutions to adopt AI detection tools, but this reaction often causes more harm than good. Although detection software promises to identify machine-generated writing, studies show that these systems are unreliable and inconsistent. They can produce false positives, flagging honest students, and false negatives, missing actual misuse. This undermines trust between educators and learners and can even lead to unjust penalties that damage academic confidence.

Detection algorithms analyze text patterns, predictability, and probability scores to estimate whether AI wrote a passage. However, human writing can also appear “AI-like,” especially when the writer is concise or a non-native English speaker. A 2024 report in Nature on AI detection accuracy showed error rates of up to 30% and significant bias against second-language writers. These findings demonstrate why educators should be cautious before treating detector outputs as evidence of misconduct.
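A short calculation shows why a detector flag is weak evidence on its own. The Python sketch below applies Bayes' rule to estimate how likely a flagged essay is to be AI-written. All three inputs (the share of AI-written essays, the false positive rate, and the false negative rate) are illustrative assumptions, not measurements of any particular tool.

```python
def flagged_is_ai_probability(
    prevalence: float,
    false_positive_rate: float,
    false_negative_rate: float,
) -> float:
    """Return P(essay is AI-written | detector flags it) using Bayes' rule."""
    true_positive_rate = 1.0 - false_negative_rate
    flagged_ai = prevalence * true_positive_rate             # AI-written and flagged
    flagged_human = (1.0 - prevalence) * false_positive_rate  # human-written and flagged
    total_flagged = flagged_ai + flagged_human
    if total_flagged == 0:
        raise ValueError("the detector never flags anything under these rates")
    return flagged_ai / total_flagged


# Assumed scenario: 20% of essays are AI-written, the detector wrongly flags
# 15% of human essays and misses 20% of AI-written ones.
probability = flagged_is_ai_probability(0.20, 0.15, 0.20)
print(f"{probability:.2f}")  # 0.57
```

Under these assumed rates, roughly four in ten flagged essays would have been written by a human, which is why a flag should prompt a conversation rather than a penalty.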

Shifting focus from detection to education

Rather than relying on flawed detection tools, institutions should emphasize transparent conversation about AI use. Asking students to disclose when and how they used AI tools is more effective than trying to catch hidden use. Educators can include reflection prompts such as: “Which part of your assignment did AI assist, and how did you verify accuracy?” Such questions shift responsibility toward ethical decision-making and teach honesty as part of the learning process.

- Transparency over surveillance: Build trust through AI usage statements rather than algorithmic suspicion.

- Evidence-based evaluation: Judge the student’s reasoning and engagement, not the statistical output of a detector.

- Policy clarity: Publish clear guidelines on acceptable AI support, distinguishing between assistance and substitution.

When teachers and institutions prioritize dialogue over policing, academic integrity becomes a shared responsibility. As Boehm and Thurlow (2024, Computers and Education) argue, fostering ethical awareness through open discussion is more effective at reducing misconduct than technological surveillance.

AI detection tools may appear to offer control, but they often create confusion and anxiety. Education thrives on curiosity and trust, not suspicion. The best safeguard against misuse is teaching students how to use AI thoughtfully and disclose it transparently.

Building an institutional culture for AI inclusion

Students using AI are part of a wider transformation that institutions must lead with vision. Creating an AI-inclusive culture means aligning policies, pedagogy, and professional development toward shared principles of responsible use. This is not just about technology adoption; it is about institutional mindset. Universities and schools that succeed in this transformation approach AI as an educational partner rather than a disciplinary threat.

Institutions need clear and consistent policies that articulate how AI can ethically support teaching and learning. According to the TeachAI Policy Toolkit (2025), successful AI inclusion begins with three pillars: transparency, training, and trust. These principles ensure that both educators and students understand the boundaries and opportunities of AI in education. Transparent policy prevents confusion, training builds confidence, and trust fosters open communication.

Institutional steps toward AI readiness

Administrators can start with structured actions that build a coherent framework for AI use:

- Develop clear policy statements: Define acceptable and unacceptable uses of AI tools across academic levels.

- Offer professional development: Provide workshops where teachers learn how to integrate AI into lesson design and evaluation.

- Support transparency mechanisms: Introduce AI disclosure forms or reflection logs within assignments.

Equally important is communication. Students should understand that responsible AI use aligns with institutional values of integrity and curiosity. Instead of relying on punitive responses, schools should celebrate ethical innovation and experimentation. The UNESCO AI in Education Report (2024) emphasized that institutions embracing collaborative policymaking among teachers, administrators, and students achieve smoother implementation and stronger trust.

Creating this culture also involves recognizing the diversity of access to AI tools. Equity initiatives—such as providing institutional subscriptions or offering training for underrepresented groups—ensure that every student can learn within the same technological environment. Inclusion is not only ethical; it prevents new digital divides from forming.

When an institution models responsible AI use, students learn through culture as much as curriculum. A unified policy framework signals that AI literacy is an academic expectation and a professional necessity. Over time, this coherence replaces confusion with confidence and fear with informed participation.

Equity, ethics, and access when students use AI

Students using AI do not experience technology equally. Access to advanced AI platforms often depends on socioeconomic status, institutional resources, or regional infrastructure. Without thoughtful intervention, AI adoption risks widening educational inequalities rather than narrowing them. True progress means ensuring that every learner can engage with AI tools safely, affordably, and critically.

Ethical education around AI should therefore start with fairness. Teachers must discuss not only how to use AI responsibly but also who gets to use it and under what conditions. The UNESCO (2024) guidance on AI ethics emphasizes equity as a foundational principle, urging schools to integrate access policies that prevent exclusion or bias. If AI literacy becomes a privilege, the gap between well-supported and under-resourced students will only grow.

Promoting fairness and accessibility

Institutions can make AI education equitable through a combination of policy and practice. Useful approaches include:

- Provide shared AI tools: Offer campus licenses or open-access alternatives so every student can participate equally.

- Address language and cultural bias: Encourage students to test AI outputs for stereotyping or misrepresentation and discuss findings in class.

- Integrate ethics across curricula: Embed fairness and bias awareness in assignments, case studies, and group projects.

AI ethics also extends to data privacy. Students should know what personal information tools collect and how to protect it. The OECD report on AI in Education (2024) warns that uninformed consent or data misuse can erode trust in digital learning systems. Transparent discussion of these topics strengthens students’ digital citizenship and critical awareness.

Teaching ethical reasoning through AI

AI offers a valuable opportunity to teach ethics through practice. Students can explore case studies where algorithmic bias affected hiring, healthcare, or justice systems and then reflect on educational parallels. When learners identify moral implications themselves, they develop empathy and accountability. As Wingate and Pereira (2024, Teaching in Higher Education) found, embedding ethical reflection into classroom activity leads to measurable gains in civic responsibility and critical judgment.

Equity and ethics are inseparable in AI education. Ensuring equal access, promoting fairness, and discussing responsibility safeguard education from becoming a tool of exclusion. When students understand not only how AI works but also who benefits from it and why, learning becomes both inclusive and transformative.

Case studies and example frameworks for guiding students using AI

Students using AI can benefit most when institutions apply structured, research-based frameworks instead of ad-hoc rules. Across the globe, universities and schools are experimenting with innovative approaches that integrate AI responsibly into teaching and assessment. These case studies show that the most effective models share a common pattern: transparency, collaboration, and critical reflection.

One example comes from the TeachAI Global Toolkit (2025), developed by international partners including UNESCO, the World Economic Forum, and the International Society for Technology in Education. The framework emphasizes developing clear learning outcomes for AI literacy, aligning them with existing curriculum goals. It recommends embedding AI ethics, practical use, and critical evaluation within all academic disciplines rather than isolating them in computer science courses.

Examples of AI integration in teaching and education

Several institutions have become early adopters of AI-integrated learning models:

- University of Sydney (Australia): Introduced “AI Aware Writing” modules where students use AI for brainstorming and critique, with reflective commentary counting toward the grade.

- University of Michigan (USA): Launched an “AI Literacy and Integrity” program requiring disclosure of AI use in all written assignments, accompanied by student self-evaluations of their process.

- ETH Zurich (Switzerland): Developed AI audit assignments where students examine AI outputs for bias or misinformation, linking digital literacy with ethics education.

- University College London (UK): Runs interdisciplinary workshops where educators co-design AI-based activities for law, medicine, and humanities, showing how principles can adapt across subjects.

These programs demonstrate that teaching AI responsibly requires not additional workload but a strategic redesign of existing courses. When AI tools are used to support reflection, fact-checking, and iteration, they reinforce rather than replace human learning.

Building adaptable frameworks

Educators can draw from these examples to develop local frameworks that fit their institutional goals. Effective models typically include:

- Policy alignment: Clarify institutional values and expected conduct for AI use.

- Teacher empowerment: Provide educators with continuous training and shared resources for AI literacy.

- Student participation: Involve learners in discussions about fairness, bias, and ethical standards.

- Evaluation transparency: Make criteria for grading AI-supported work explicit from the beginning.

As the OECD (2024) concludes, institutions that build participatory frameworks—where teachers and students co-develop guidelines—achieve higher trust and better learning outcomes. The message is clear: successful AI education depends not only on rules but on relationships. When educators invite students into the process, both sides learn how to think more critically about the tools shaping modern knowledge.

Conclusion and checklist for teachers, professors, and institutions

Students using AI are reshaping what it means to teach, learn, and assess. The challenge for educators and institutions is not to control this change but to guide it with structure, empathy, and evidence. Artificial intelligence is here to stay, and the way it integrates into education will define the next generation of learning. With thoughtful strategy, schools can transform AI from a source of anxiety into a catalyst for deeper understanding and collaboration.

Research from Luckin et al. (2023, Phi Delta Kappan) emphasizes that long-term success with AI in education depends on two intertwined priorities: building teacher confidence and designing ethical learning environments. This dual focus ensures that technology serves pedagogy, not the other way around. Institutions that invest in professional development, curriculum redesign, and ethical awareness will cultivate generations of students who use AI to learn responsibly rather than to replace effort.

Embedding responsible AI into everyday practice

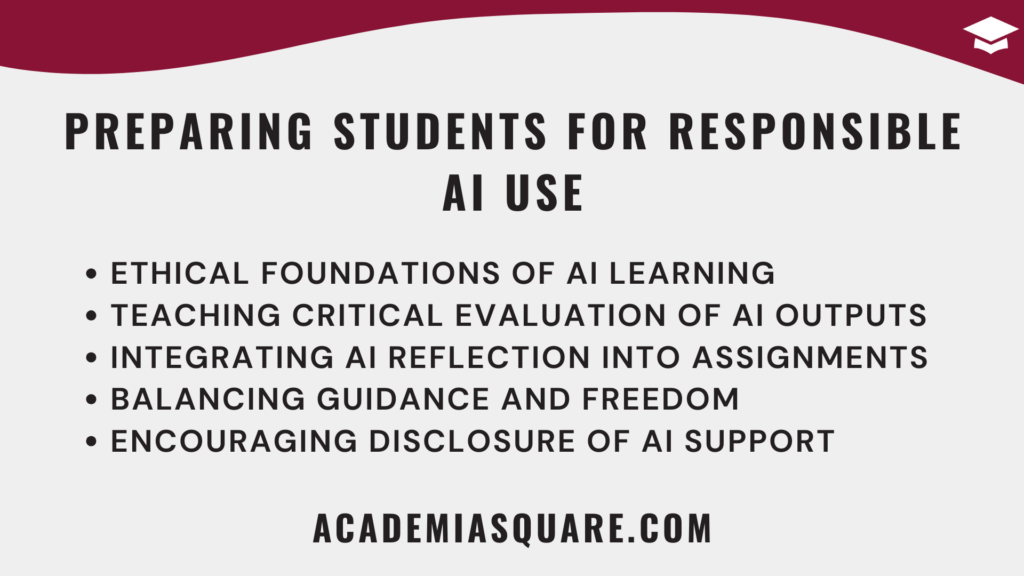

To move from discussion to action, educators can use a structured approach built around five principles:

- Transparency: Require AI disclosure in all written or digital submissions.

- Equity: Provide equal access to AI tools and training for all students.

- Reflection: Encourage critical thinking about how AI shapes learning and problem-solving.

- Integration: Embed AI ethics and literacy across curricula rather than isolating it as a special topic.

- Evaluation reform: Design assessments that reward reasoning, collaboration, and originality.

AI is no longer optional in education—it is integral. The most successful teachers and institutions will be those who teach both the use and the understanding of AI. Students who master that balance will enter the workforce prepared to lead, innovate, and think critically in an increasingly intelligent world.

Educators and institutions stand at a turning point. Those who choose to guide rather than prohibit will lead the way into a smarter, more equitable era of education. By turning policy into practice and fear into curiosity, schools can transform AI from a disruption into a lifelong tool for growth, creativity, and responsible knowledge creation.

Sources and Recommended Reading on Students Using AI

- Mansoor HMH. “Artificial intelligence literacy among university students—a cross-national study.” Frontiers in Communication 2024.

- Zhou X. “Defining, enhancing, and assessing artificial intelligence in K-12 and higher education contexts: a systematic review.” Interactive Learning Environments 2025.

- Yang Y. “Navigating the landscape of AI literacy education: insights and frameworks.” Humanities and Social Sciences Communications 2025.

- Stanford Teaching Commons. “Understanding AI Literacy.” Stanford University (online teaching guide).

- Hornberger M et al. “A multinational assessment of AI literacy among university students in Germany, the UK and the US.” 2025.

- Steelman J. “On AI and Education.” 2025 (essay on AI integration and student learning).

- OECD. “Artificial Intelligence in Education: Policy Challenges and Opportunities.” OECD Publishing, 2024.

- Hyland K. “The role of genre and writing process in second-language writing.” Journal of Second Language Writing 2019.

- Dunlosky J et al. “Improving Students’ Learning with Effective Learning Techniques.” Psychological Science in the Public Interest 2013.

- AcademiaSquare. “How to Read Critically: Complete Guide for Beginners.” 2025.

- AcademiaSquare. “The Best Study Techniques According to Research (2025).”

FAQs on Students Using AI

Should teachers prohibit students from using AI tools?

Prohibiting AI use tends to backfire. Studies published in the Journal of Teacher Education (2023) show that restrictive policies reduce transparency and drive unregulated use. Instead, structured AI literacy programs improve academic integrity and learning quality by teaching responsible usage rather than enforcing bans.

Are AI detection tools reliable for identifying AI-generated student work?

AI detection tools are not fully reliable. Research by Liang et al. (2024) found that detection algorithms produced false positives in up to 15% of human-written essays and failed to catch over 20% of AI-generated ones. These errors can lead to unfair penalties, especially for non-native speakers.

How can institutions encourage responsible AI use among students?

Institutions can create AI policies emphasizing transparency, ethics, and fairness. The TeachAI Global Policy Toolkit (2025) recommends integrating AI literacy in curricula, training faculty, and encouraging students to document their AI use as part of reflective learning.

How should homework and grading change in the age of AI?

Homework should remain but count for a smaller share of the final grade, around 10% to 20%. In-class assessments such as discussions, debates, and collaborative projects provide better indicators of understanding. Research from the Journal of Computer Assisted Learning (2023) supports diversified evaluation methods to maintain fairness in AI-assisted environments.

Can students learn better by combining AI tools with traditional methods?

Yes. Combining AI assistance with independent reasoning strengthens metacognitive awareness and self-regulated learning. A 2023 study in the journal Written Communication showed that students who analyzed AI feedback improved their writing coherence and critical thinking by up to 25%.

What are the main ethical concerns when students use AI?

The main ethical concerns include plagiarism, lack of attribution, misinformation, and bias. According to the UNESCO AI Ethics in Education Report (2024), institutions must promote transparency, data privacy, and awareness of algorithmic bias to ensure responsible academic use.

How can teachers assess learning when students use AI tools?

Teachers can shift focus from written output to reasoning, oral defense, and reflection. In-class presentations, peer review, and collaborative analysis help evaluate comprehension. EdTech Magazine (2025) notes that schools using mixed evaluation formats saw improved engagement and more authentic student performance.

Should AI be compared to calculators or computers in education?

Yes. Integrating AI responsibly is comparable to the way calculators and computers were introduced. Each tool initially faced resistance but became essential for problem-solving. As Holmes et al. (2023, Educational Review) explain, teaching students to use AI critically rather than banning it fosters long-term digital literacy and analytical skills.

What classroom activities help students use AI responsibly?

One effective activity is the “AI Reflection Exercise,” in which students write an essay independently, use AI for feedback, and then analyze the suggested changes. This helps them understand their writing patterns and gaps in critical thinking. Research in the Journal of Writing Research (2023) found that this reflective method enhances accuracy and confidence in revision.

How can universities support equity in AI access?

Universities can provide shared AI tools, open-source platforms, and faculty training to ensure fair access. A 2023 study in Computers in Human Behavior found that equitable AI access correlates with improved student confidence and performance across socioeconomic groups.

What long-term skills can students gain from using AI in learning?

When used responsibly, AI fosters critical evaluation, self-reflection, and adaptability, all skills essential for lifelong learning. A 2022 study in the journal Written Communication found that iterative feedback loops using AI improved argumentation and cognitive flexibility among university students.

How can teachers stay informed about AI developments in education?

Teachers can follow research from organizations such as UNESCO and the OECD AI Observatory, and from academic journals including Computers & Education and Learning and Instruction. Continuous professional development in AI literacy helps educators maintain ethical and effective teaching practices.